Decision Trees

The unreasonable power of nested decision rules.

Let's Build a Decision Tree

Let's pretend we're farmers with a new plot of land. Given only the Diameter and Height of a tree trunk, we must determine if it's an Apple, Cherry, or Oak tree.

To do this, we'll use a Decision Tree.

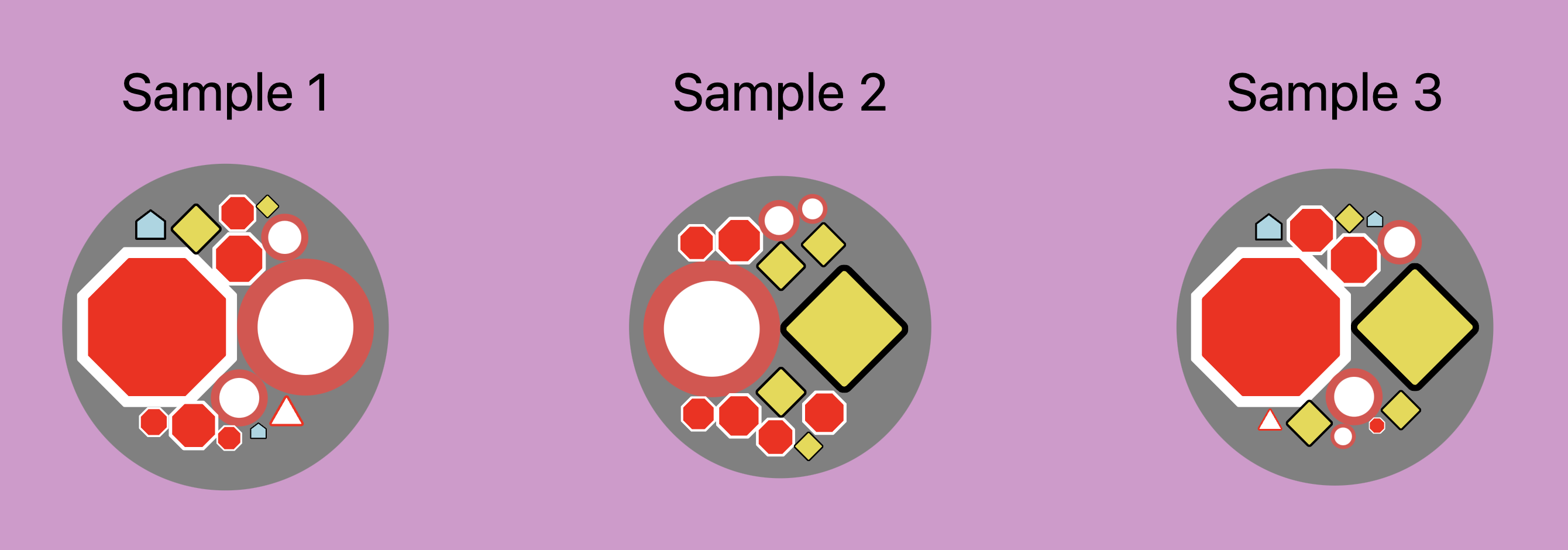

Start Splitting

Almost every tree with a Diameter ≥ 0.45 is an Oak tree! We can assume that any other tree in that region will also be one.

This first decision node will act as our root node. We'll draw a vertical line at this Diameter, classify everything to the right of it as Oak (our first leaf node), and continue partitioning the remaining data on the left.
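Here the threshold is picked by eye. A common way for a learner to choose it automatically (the article does not say which criterion it uses) is to scan candidate thresholds and keep the one whose two sides have the lowest weighted Gini impurity. Below is a minimal Python sketch of that idea; the function names and the toy (diameter, species) data are hypothetical, not taken from the article.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0.0 means pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_threshold(values, labels):
    """Try midpoints between sorted unique values and return (threshold, weighted
    impurity) for the split `value >= threshold` vs `value < threshold`."""
    unique = sorted(set(values))
    best = (None, float("inf"))
    for lo, hi in zip(unique, unique[1:]):
        t = (lo + hi) / 2
        right = [y for x, y in zip(values, labels) if x >= t]
        left = [y for x, y in zip(values, labels) if x < t]
        # Weight each side's impurity by how many points it holds.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical toy data: trunk diameters and species (not the article's dataset).
diameters = [0.10, 0.20, 0.25, 0.30, 0.35, 0.50, 0.55, 0.60, 0.70]
species = ["Apple", "Cherry", "Apple", "Cherry", "Apple", "Oak", "Oak", "Oak", "Oak"]

threshold, impurity = best_threshold(diameters, species)
print(threshold, impurity)  # threshold ≈ 0.425, weighted impurity ≈ 0.267
```

Gini impurity is only one option; entropy (information gain) is another widely used splitting criterion.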

Split Some More

We continue along, looking for the next good way to split our plot of land. Creating a new decision node at Height ≤ 4.88 leads to a nice section of Cherry trees, so we partition our data there.

Our Decision Tree updates accordingly, adding a new leaf node for Cherry.
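The two decision nodes so far can be stored as a small tree and traversed to classify a trunk. This is a minimal sketch: the thresholds 0.45 and 4.88 come from the text, but the `Leaf`/`Node` classes and `predict` are hypothetical names, and labelling the not-yet-split region "Apple" is a placeholder assumption rather than the article's final tree.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    label: str  # class predicted for every point that reaches this leaf

@dataclass
class Node:
    feature: str      # "diameter" or "height"
    threshold: float
    op: str           # ">=" or "<=": the comparison that sends a point to `yes`
    yes: "Tree"
    no: "Tree"

Tree = Union[Leaf, Node]

def predict(tree: Tree, sample: dict) -> str:
    """Walk from the root, following the branch each decision selects, until a leaf."""
    while isinstance(tree, Node):
        value = sample[tree.feature]
        passed = value >= tree.threshold if tree.op == ">=" else value <= tree.threshold
        tree = tree.yes if passed else tree.no
    return tree.label

# Root: Diameter >= 0.45 -> Oak; otherwise Height <= 4.88 -> Cherry.
# The final "Apple" leaf is a placeholder for the region still to be split.
tree = Node("diameter", 0.45, ">=", Leaf("Oak"),
            Node("height", 4.88, "<=", Leaf("Cherry"), Leaf("Apple")))

print(predict(tree, {"diameter": 0.6, "height": 7.0}))  # Oak
print(predict(tree, {"diameter": 0.2, "height": 3.5}))  # Cherry
print(predict(tree, {"diameter": 0.2, "height": 6.0}))  # Apple
```

Each further split in the walkthrough would replace one `Leaf` with another `Node`, so the data structure grows exactly as the diagram does.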

And Some More

We are now left with many Apple trees and some Cherry trees.

No problem: we'll add another partition to separate out the Apple trees a bit better.

Once again, our Decision Tree updates accordingly.

And Yet Some More

The remaining region just needs one more horizontal division and boom, our job is done! We've obtained a good set of nested decisions.

That said, some regions still enclose a few misclassified points.

Should we continue splitting, partitioning into smaller sections?

Hmm...

Don't Go Too Deep!

Going deeper would complicate our tree: it would start learning from the noise in the data, resulting in overfitting.

Does this sound familiar? It's the well-known Bias-Variance Tradeoff, so we'll stop here.
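Capping the depth is one simple way to "stop here". The sketch below is a hypothetical, minimal greedy learner, not the article's implementation: it recursively chooses the split with the lowest weighted Gini impurity and stops when a node is pure or `max_depth` is reached, at which point the leaf predicts its majority class. The toy (diameter, height) data is made up and includes one deliberately mislabelled Cherry among the Oaks.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure node, larger for more mixed nodes."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def best_split(X, y):
    """Return (feature, threshold) with the lowest weighted Gini, or None if no split helps."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # midpoint between neighbouring values
            left = [lab for row, lab in zip(X, y) if row[f] < t]
            right = [lab for row, lab in zip(X, y) if row[f] >= t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build(X, y, depth=0, max_depth=None):
    """Grow the tree as nested dicts; leaves are plain class labels."""
    if len(set(y)) == 1 or (max_depth is not None and depth >= max_depth):
        return majority(y)  # pure node, or depth limit reached
    split = best_split(X, y)
    if split is None:
        return majority(y)
    f, t = split
    left_idx = [i for i, row in enumerate(X) if row[f] < t]
    right_idx = [i for i, row in enumerate(X) if row[f] >= t]
    return {
        "feature": f,
        "threshold": t,
        "left": build([X[i] for i in left_idx], [y[i] for i in left_idx], depth + 1, max_depth),
        "right": build([X[i] for i in right_idx], [y[i] for i in right_idx], depth + 1, max_depth),
    }

def predict(tree, row):
    """Go right when row[feature] >= threshold, left otherwise, until a leaf."""
    while isinstance(tree, dict):
        tree = tree["right"] if row[tree["feature"]] >= tree["threshold"] else tree["left"]
    return tree

def depth_of(tree):
    """Number of decision levels between the root and the deepest leaf."""
    if not isinstance(tree, dict):
        return 0
    return 1 + max(depth_of(tree["left"]), depth_of(tree["right"]))

# Hypothetical (diameter, height) data; the Cherry at (0.65, 5.5) is deliberate noise.
X = [(0.20, 3.0), (0.30, 4.0), (0.25, 6.0), (0.35, 6.5),
     (0.55, 4.5), (0.60, 6.5), (0.65, 5.5), (0.70, 4.0), (0.75, 7.0)]
y = ["Cherry", "Cherry", "Apple", "Apple",
     "Oak", "Oak", "Cherry", "Oak", "Oak"]

def accuracy(tree):
    return sum(predict(tree, row) == lab for row, lab in zip(X, y)) / len(y)

shallow = build(X, y, max_depth=2)
deep = build(X, y)  # no depth limit
print(depth_of(shallow), accuracy(shallow))  # depth 2, accuracy 8/9: noisy point predicted Oak
print(depth_of(deep), accuracy(deep))        # depth 3, accuracy 1.0: the noise is memorised
```

With `max_depth=2` the tree keeps the three main regions and labels the noisy point Oak; without a limit it adds a third level just to carve out that single point, which is the noise-fitting described above.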

We're done! We can now pass in new data and classify each point as an Apple, Cherry, or Oak tree!

Welcome to Decision Tree 101
